by Mark Adams, CUI Inc.

As the Internet of Everything puts pressure on Cloud services, software-optimized power conversion will help save energy and let operators keep costs under control.

The Internet of Everything (IoE) will capture data continuously from activities such as retail, transportation, infrastructure management, manufacturing, mining, food production, security and many others. Figures from Cisco say a large retail store collects about 10 GB of data per hour and transmits 1 GB of that to a data center. An automated manufacturing site can generate about 1 TB of data per hour, of which about 5 GB may be stored, and a large mining operation can generate up to 144 TB per hour.

Cloud services will hold the key to transforming the data collected into useful information. But they will face enormous pressure to keep up with the explosion in data received from more and more IoE applications.

Energy is the most significant resource consumed by the data centers at the heart of today’s Cloud services. Over a typical lifetime of three years, the cost to power a server exceeds the equipment purchase price. It is also costly to run the cooling systems that maintain safe equipment-operating temperatures. The need to minimize these costs is driving major industry trends, such as siting new data centers in cooler climates and close to plentiful renewable sources of energy such as hydroelectric plants. Currently favored locations include the U.S. Pacific Northwest and Scandinavia. Operators are also trying to establish higher maximum equipment operating temperatures to save on cooling costs.

However, operators also recognize the importance of treating the cause of heat generation: poor energy efficiency of data center equipment.

There is now a push to maximize energy efficiency of servers, power supplies and system-management software. It is worth noting, however, that peak power consumption is rising to meet demands for more computing capability. Typical server board consumption has risen from a few hundred watts to 2 or 3 kW today, and could reach 5 kW or more in the future. As a result, there is a growing difference between the server’s minimum power at light load and maximum full-load power. Power distribution architectures are becoming more flexible, with real-time adaptive capabilities, to maintain optimal efficiency under all operating conditions.

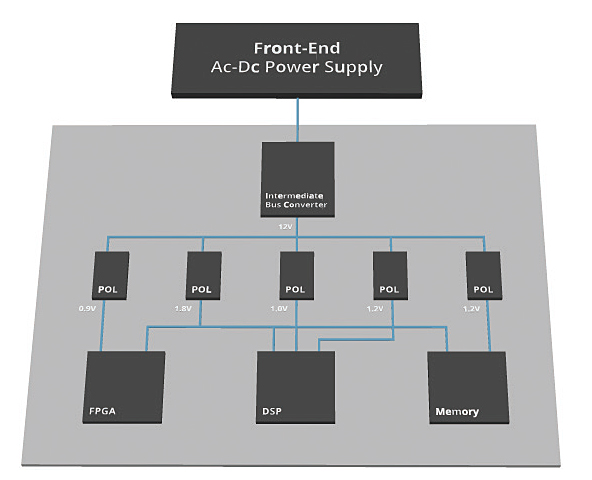

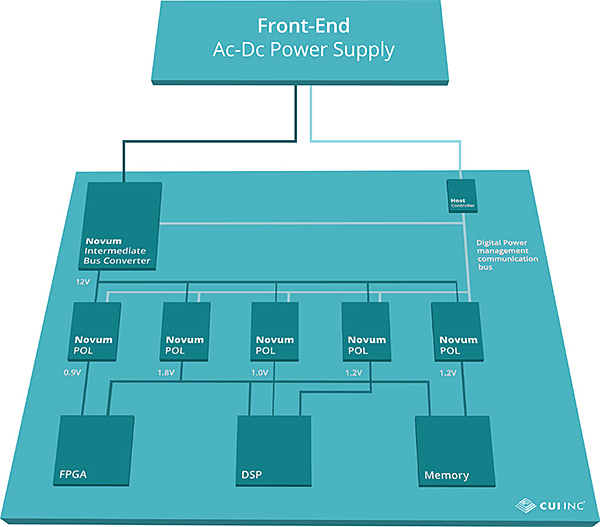

A typical distributed power architecture comprises a front-end ac-to-dc converter that delivers a 48-Vdc input to an Intermediate Bus Converter (IBC). The IBC provides a 12-V intermediate bus that supplies low-voltage, dc-to-dc point-of-load (POL) converters positioned close to major power-consuming components on the board, such as processors, systems-on-chip or FPGAs. Multiple POLs may be used to supply core, I/O and any other voltage domains.

The 48-Vdc front-end output and 12-V intermediate bus voltage have been chosen to minimize down-conversion losses and losses proportional to current and distance when supplying typical server boards. But these fixed voltages are less suited to maintaining optimal efficiency as core voltage, current draw, maximum power and the difference between full-load and no-load power all change. The ability to set different voltages, and change them dynamically in real time, lets the system adapt continuously to optimize efficiency.

Take control with PMBus

The PMBus protocol provides an industry-standard framework for communicating with digitally controllable front-end, intermediate bus and point-of-load converters. A host controller can monitor the status of the converters and can send commands to optimize input and output voltages. This controller can also manage other aspects such as enable/disable, voltage margining, fault management, sequencing, ramp-up and tracking.
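As a rough illustration of the monitoring side, the sketch below decodes LINEAR11, the 16-bit format PMBus commonly uses for telemetry such as input voltage, current and temperature (output voltage typically uses a different encoding). The sample words are made-up values, not readings from any particular converter, and the bus access itself is left out.

```python
def decode_linear11(word: int) -> float:
    """Decode a 16-bit PMBus LINEAR11 word into a real-world value.

    Bits 15..11 hold a 5-bit two's-complement exponent N,
    bits 10..0 hold an 11-bit two's-complement mantissa Y,
    and the value is Y * 2**N.
    """
    exponent = (word >> 11) & 0x1F
    mantissa = word & 0x7FF
    if exponent >= 0x10:      # sign-extend the 5-bit exponent
        exponent -= 0x20
    if mantissa >= 0x400:     # sign-extend the 11-bit mantissa
        mantissa -= 0x800
    return mantissa * 2.0 ** exponent


# Hypothetical raw words, as a host controller might read them back.
sample_words = {
    "input voltage (V)": 0xD300,
    "output current (A)": 0xDA80,
    "temperature (deg C)": 0xE320,
}

for name, raw in sample_words.items():
    print(f"{name}: {decode_linear11(raw)}")
```

With telemetry in engineering units, the host software can compare readings against thresholds and decide which adjustment commands to send.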

As system designers develop more effective ways to exploit the control PMBus brings, power architectures are becoming software defined and respond in real time to optimize efficiency. Some of today’s most powerful techniques for optimizing efficiency include Dynamic Bus Voltage (DBV) optimization, Adaptive Voltage Scaling (AVS), and multicore activation on demand.

DBV offers a means of adjusting the intermediate bus voltage dynamically to suit prevailing load conditions. At higher levels of server-power demand, PMBus instructions can command a higher output voltage from the IBC to reduce the output current and minimize distribution losses.
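The effect on distribution losses can be sketched with a simple resistive model: for a fixed load power, bus current falls as bus voltage rises, and the loss in the distribution path scales with the square of the current. The path resistance, load power and candidate voltages below are illustrative assumptions, not figures from any specific board.

```python
def distribution_loss_w(load_power_w: float,
                        bus_voltage_v: float,
                        path_resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the intermediate bus for a given load."""
    current_a = load_power_w / bus_voltage_v
    return current_a ** 2 * path_resistance_ohm


PATH_RESISTANCE_OHM = 0.002   # assumed 2 milliohm distribution path
LOAD_POWER_W = 600.0          # assumed heavy-load operating point

for bus_voltage_v in (10.0, 12.0, 13.5):   # illustrative IBC set points
    loss = distribution_loss_w(LOAD_POWER_W, bus_voltage_v, PATH_RESISTANCE_OHM)
    print(f"{bus_voltage_v:>5.1f} V bus: {loss:.2f} W lost in distribution")
```

The model covers only the resistive distribution loss. A real DBV policy would also weigh how IBC and POL conversion efficiency vary with bus voltage and load.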

AVS is a technique used by high-performance microprocessors to optimize both the supply voltage and clock frequency. The point is to ensure processing demands are always satisfied with the lowest possible power consumption. AVS also enables automatic compensation for the effects of silicon process variations and changes in operating temperature. To support AVS, the PMBus specification has recently been revised to define the AVSBus, which allows a POL converter to respond to AVS requests from an attached processor.
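To see why scaling voltage and frequency together is so effective, consider the usual first-order approximation for CMOS dynamic power, which is proportional to C·V²·f. This relation is a textbook simplification brought in for illustration and is not taken from the PMBus or AVSBus specifications; the scaling factors below are arbitrary examples.

```python
def relative_dynamic_power(voltage_scale: float, frequency_scale: float) -> float:
    """Dynamic power relative to nominal, using P ~ C * V^2 * f.

    Both arguments are ratios to the nominal operating point (1.0 = nominal).
    Switched capacitance C is assumed unchanged, and leakage is ignored.
    """
    return voltage_scale ** 2 * frequency_scale


# Example: run at 90% of nominal voltage and 80% of nominal clock frequency.
scaled = relative_dynamic_power(voltage_scale=0.9, frequency_scale=0.8)
print(f"dynamic power relative to nominal: {scaled:.1%}")
```

Because voltage enters as a square, even a modest supply reduction during periods of low processing demand gives a disproportionate saving.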

Multicore activation-on-demand provides a means of activating or powering-down individual cores of a multicore processor in response to changes in load. Clearly, de-activating unused cores at times of low processing load can help gain valuable energy savings.

These are the first adaptive features to be implemented as power supply developers begin introducing software-defined power architectures. Many additional, powerful techniques are expected to emerge, assisted by the arrival of PMBus-compatible front-end ac-to-dc power supplies, such as the CUI PSE-3000 and PSA-1100, as well as Novum digital IBCs and non-isolated dc-to-dc digital POLs.

The continuous optimization of power-conversion architectures and bus voltages will raise conversion efficiency at every stage. Consider a power supply comprising a front-end ac-to-dc converter with average efficiency of 95%, an IBC operating at 93% and a POL operating at 88%. Because the stages are in series, their efficiencies multiply, so an improvement of just one percentage point in each stage can reduce the power dissipated from about 22.3% of the input power to about 19.7%. This not only represents a reduction of roughly 12% in power losses, but also relieves the load on the data-center cooling system to deliver extra energy savings.
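The arithmetic behind this example can be checked with a short sketch. It assumes only that the three stages are in series, so the overall efficiency is the product of the stage efficiencies; the function name is illustrative.

```python
from math import prod


def chain_loss(stage_efficiencies):
    """Fraction of input power dissipated by converters connected in series."""
    return 1.0 - prod(stage_efficiencies)


baseline = chain_loss([0.95, 0.93, 0.88])   # front end, IBC, POL
improved = chain_loss([0.96, 0.94, 0.89])   # each stage one point better
relative_saving = (baseline - improved) / baseline

print(f"baseline loss:       {baseline:.1%}")
print(f"improved loss:       {improved:.1%}")
print(f"reduction in losses: {relative_saving:.1%}")
```

The results agree with the figures quoted in the text to within rounding, and the same function can be reused to test other stage efficiencies.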

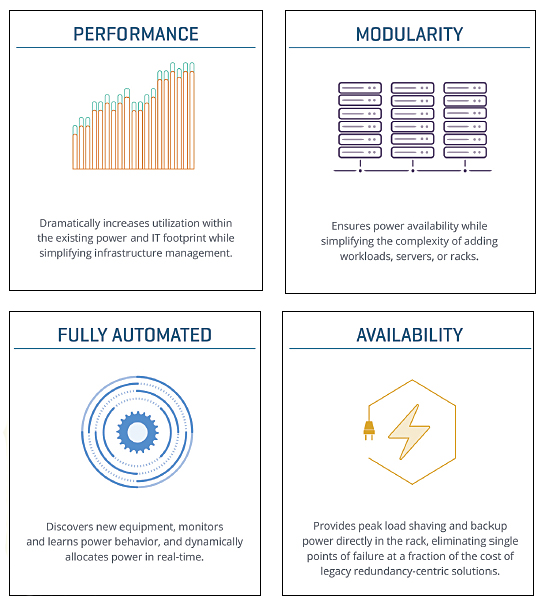

Underlying all these developments is an understanding of how data centers use power. Software can then intelligently provision and manage that power to realize significant energy savings. Such virtualization of the power infrastructure makes power an elastic resource and can improve usage by up to 50% within the existing power footprint. This not only means more efficient power consumption, but also avoids the capital expense of bringing additional, and unnecessary, resources into play. Virtual Power Systems, a company that is championing a concept called Software Defined Power, has recently partnered with CUI to extend its software into the hardware domain with an Intelligent Control of Energy (ICE) Block. The ICE will help manage power sources within data centers and similar ecosystems.

All in all, the Internet of Everything will feed huge quantities of data into the Cloud. This data must be processed quickly and stored for later reference. At the board level, energy lost during power conversion can be reduced by adjusting bus voltages as load conditions change. PMBus-compatible converters and real-time software-based control help stem these losses. At the system level, optimized hardware and software will greatly improve power usage in data centers as capacity demands rise.

CUI Inc.

www.cui.com
